
About Impulse Responses and Convolution

What's this all about?

Suppose you are given an audio signal S and would like it to sound as if it were rendered in a specific room. There are two options:

1. Create a model of the room by measuring the geometry of its interior and the reflection characteristics of all surfaces, and let the computer simulate how the sound waves of S propagate.

2. Use an impulse response of the room to transform S in the same way the room would transform it. The impulse response can be recorded by playing a test sound, or it can be calculated by a simulation based on a model as in option 1.

Here we are concerned with option 2.

Why and how does this work?

Under usual circumstances (i.e. not at extremely high volume levels), the propagation of sound is a linear process. When a sender plays with twice the amplitude, the receiver receives twice the amplitude. When two senders at the same location play two signals, the receiver receives the sum of these signals. Moreover, the room itself does not change over time: the same signal played one second later produces the same response, just one second later.

Consequently, to calculate how a signal S is transformed by a room, resulting in a signal T(S), it is sufficient to know how some 'basic' signal is transformed by the room. If you can decompose S into a linear combination of (appropriately time-translated copies of) the basic signal, then you get T(S) by calculating the corresponding linear combination of the (correspondingly time-translated) transformations of the basic signal.
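This argument relies on the room behaving linearly and not changing over time. A minimal sketch checks these properties on short lists of samples, using a made-up "room": the hypothetical function `toy_room` simply adds one half-amplitude echo two samples after the direct sound. A real room has thousands of reflections, but the properties are the same.

```python
# Hypothetical toy "room": the listener hears the direct sound plus one echo
# that arrives 2 samples later at half the amplitude.
def toy_room(signal):
    out = [0.0] * (len(signal) + 2)
    for n, x in enumerate(signal):
        out[n] += x            # direct sound
        out[n + 2] += 0.5 * x  # delayed, attenuated reflection
    return out


# Values are chosen so that all floating-point results are exact.
a = [1.0, -2.0, 3.0]
b = [0.5, 0.0, -1.0]

# Twice the input amplitude gives twice the output amplitude.
assert toy_room([2 * x for x in a]) == [2 * y for y in toy_room(a)]

# The response to the sum of two signals is the sum of the two responses.
a_plus_b = [x + y for x, y in zip(a, b)]
assert toy_room(a_plus_b) == [x + y for x, y in zip(toy_room(a), toy_room(b))]

# Playing the signal one sample later gives the same response, one sample later.
assert toy_room([0.0] + a) == [0.0] + toy_room(a)
```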

So what is an impulse response, anyway? And convolution?

When we work with a digital audio signal in the computer, we deal with a discretised approximation of an analog function, represented by values sampled at some frequency (like 44,100 Hz). In this context, the natural basic signal is one with a single peak value: a function that has value 1 at time 0 and is zero elsewhere. Any sampled signal can trivially be represented as the sum of suitably scaled and time-translated copies of this basic signal.
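The decomposition can be sketched in a few lines of Python. The helper names `unit_impulse`, `decompose` and `recompose` are hypothetical and only illustrate the idea: every sample becomes a copy of the basic signal, scaled by the sample value and shifted to the sample's position, and adding up all copies gives back the original signal.

```python
def unit_impulse(length, position):
    """Basic signal: value 1 at `position`, zero everywhere else."""
    return [1.0 if n == position else 0.0 for n in range(length)]


def decompose(signal):
    """Return one (weight, shifted impulse) pair per sample."""
    return [(value, unit_impulse(len(signal), k)) for k, value in enumerate(signal)]


def recompose(terms, length):
    """Add up the weighted, time-translated impulses."""
    out = [0.0] * length
    for weight, impulse in terms:
        for n, v in enumerate(impulse):
            out[n] += weight * v
    return out


signal = [0.25, -0.5, 0.75, 0.0]
assert recompose(decompose(signal), len(signal)) == signal
```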

This test signal with a single peak sounds like a loud click or an explosion; this is the impulse. An impulse response (IR) of a given room is how this test signal sounds in that room. To get an impulse response of the room, you record this explosion-like sound in the room. This, by the way, is why the icon of Thafknar shows a little bomb, propagating acoustical waves, and a microphone.
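Combining the decomposition into impulses with linearity gives the recipe: the response to S is the sum of copies of the IR, one per input sample, each scaled by that sample and delayed by its position, i.e. T(S)[n] = Σ_k S[k] · IR[n − k]. The sketch below uses a hypothetical `toy_room` (direct sound plus one half-amplitude echo two samples later) as a stand-in for a real room. It "records" the IR by playing a single unit impulse, then checks that the hypothetical `apply_ir` predicts the room's response to another signal from the IR alone. This naive version needs len(S) × len(IR) multiplications, which is far too slow for long IRs; practical engines typically use FFT-based methods instead.

```python
# Hypothetical toy "room": direct sound plus a half-amplitude echo 2 samples later.
def toy_room(signal):
    out = [0.0] * (len(signal) + 2)
    for n, x in enumerate(signal):
        out[n] += x
        out[n + 2] += 0.5 * x
    return out


def apply_ir(signal, ir):
    """T(S)[n] = sum over k of S[k] * IR[n - k]: one copy of the IR per
    input sample, scaled by that sample and delayed by its position."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for k, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[k + j] += s * h
    return out


# "Record" the IR: play a single unit impulse (the click) in the room.
ir = toy_room([1.0])  # [1.0, 0.0, 0.5]

# The IR alone now predicts how the room transforms another signal.
# (Values are chosen so that the floating-point results are exact.)
s = [1.0, -2.0, 3.0, 0.5]
assert apply_ir(s, ir) == toy_room(s)
```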

The mathematical operation that calculates T(S) from S and the IR is called convolution. In principle, this is an easy operation, but doing it fast enough, and doing it continuously on streaming sound data, is not so easy. Happily, you don't have to worry about this.
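One textbook way to handle streaming data is overlap-add: each incoming block of samples is convolved on its own, and the part of the result that reaches past the end of the block (the reverb tail) is carried over and added to the output of the following blocks. The sketch below illustrates the idea with direct convolution; it makes no claim about how Thafknar works internally, and the class name `OverlapAddConvolver` is hypothetical.

```python
def convolve(signal, ir):
    """Direct convolution: out[n] = sum over k of signal[k] * ir[n - k]."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for k, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[k + j] += s * h
    return out


class OverlapAddConvolver:
    """Streaming convolution by overlap-add. Blocks must be non-empty."""

    def __init__(self, ir):
        self.ir = list(ir)
        self.tail = [0.0] * (len(ir) - 1)  # reverb still "ringing" from earlier blocks

    def process(self, block):
        y = convolve(block, self.ir)       # len(block) + len(ir) - 1 samples
        for i, t in enumerate(self.tail):  # add what earlier blocks left behind
            y[i] += t
        n = len(block)
        self.tail = y[n:]                  # keep the part that extends past this block
        return y[:n]                       # emit as many samples as came in

    def flush(self):
        """Return the remaining tail once the input has ended."""
        tail, self.tail = self.tail, [0.0] * (len(self.ir) - 1)
        return tail


ir = [1.0, 0.5, 0.25, 0.125]
signal = [1.0, -1.0, 2.0, 0.5, -0.5, 3.0, 0.0]

conv = OverlapAddConvolver(ir)
streamed = []
for start in range(0, len(signal), 2):     # feed the signal in blocks of 2 samples
    streamed += conv.process(signal[start:start + 2])
streamed += conv.flush()

reference = convolve(signal, ir)
assert len(streamed) == len(reference)
assert all(abs(a - b) < 1e-12 for a, b in zip(streamed, reference))
```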

The base case: One sender, one receiver – mono in, mono IR, mono out

The following important fact was not emphasised in the explanations so far: an IR is not a universal property of the room. It depends on the sender location (where the impulse was emitted) and on the receiver location (where the impulse response was recorded):

A single-channel impulse response is valid only for one position of the sound source and one position of the listener. The convolution result captures the reflection characteristics of the room, but it does not contain stereo information.

This means that with a single-channel IR you can faithfully reproduce how sound (musical instruments, human voices, whatever) emitted at one location in the corresponding room is perceived by one ear or a mono microphone at one location in the room.

The easy case: One sender, two receivers – mono in, stereo IR, stereo out

To get stereo audio, you need two IRs, or an IR with two channels, which must be recorded with a stereo microphone or with two mono microphones placed at a suitable distance from each other. Both IRs / both channels have to be recorded in one go, as even small delay and phase differences are essential for a correct stereo experience. Most IRs that you can find on the web are stereo sound files.

Still, this covers only one location of the sound source, and it is not meant for two listeners at independent locations in the room, but for the two ears of one listener at their slightly different positions in space:

A stereo impulse response is valid only for one position of the sound source and one position of the listener. The convolution result captures the reflection characteristics of the room, and it contains the stereo information encoded in the IR.

If you would like to simulate the experience of a listener at a different hearing position, then you need a different pair of mono IRs / a different stereo IR.

Practically, the mono input signal is convolved with the left IR to give the left part of the stereo output, and it is convolved with the right IR to give the right part of the stereo output. In other words, these are two instances of the "base" case, one for each ear.
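A minimal sketch of this "easy" case, assuming the stereo IR is given as two plain lists of samples. The function name `mono_to_stereo` and the IR values are made up for illustration; the point is only that each ear is an independent mono convolution.

```python
def convolve(signal, ir):
    """Direct convolution: out[n] = sum over k of signal[k] * ir[n - k]."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for k, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[k + j] += s * h
    return out


def mono_to_stereo(mono_in, ir_left, ir_right):
    """One mono source, one stereo IR: two instances of the mono base case."""
    return convolve(mono_in, ir_left), convolve(mono_in, ir_right)


# Hypothetical stereo IR: the right ear hears the direct sound one sample later.
ir_l = [1.0, 0.0, 0.5]
ir_r = [0.0, 0.75, 0.0]
left, right = mono_to_stereo([1.0, 0.5], ir_l, ir_r)
# left  == [1.0, 0.5, 0.5, 0.25]
# right == [0.0, 0.75, 0.375, 0.0]
```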

The ideal case: n senders, two receivers – mono in, n stereo IRs, stereo out

Now assume that you have several senders, for example the musicians in an orchestra. This gives n mono input signals, and for a faithful reproduction you need n stereo IRs. The IR for musician x must be created by emitting the impulse at the location of x and recording the IR at the location of the (still one) listener.

A set of n stereo impulse responses, each recorded with a different sender location but for the same receiver location, is valid for n sound sources at the respective positions and one position of the listener. The sum of the convolution results captures the reflection characteristics of the room, and it contains the stereo information encoded in the IRs.

If you would like to simulate the experience of a listener at a different hearing position, then you need a different set of n pairs of mono IRs / a different set of n stereo IRs.

Practically, each mono input signal is convolved with the corresponding stereo IR, and the stereo results are added up to give the stereo output sum signal. This corresponds to n instances of the "easy" case, one for each sound source.
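The "ideal" case can be sketched the same way. The hypothetical `render_sources` takes a list of mono input signals and a matching list of stereo IRs (each a pair of sample lists, recorded from that source's position to the one listener position), convolves each source with its own IR and sums the results channel by channel. The source signals and IR values are made up.

```python
def convolve(signal, ir):
    """Direct convolution: out[n] = sum over k of signal[k] * ir[n - k]."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for k, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[k + j] += s * h
    return out


def render_sources(sources, stereo_irs):
    """n mono sources, n stereo IRs (ir_left, ir_right) -> one stereo sum."""
    if not sources or len(sources) != len(stereo_irs):
        raise ValueError("need at least one source and exactly one stereo IR per source")
    length = max(len(s) + max(len(irl), len(irr)) - 1
                 for s, (irl, irr) in zip(sources, stereo_irs))
    out_left, out_right = [0.0] * length, [0.0] * length
    for s, (ir_left, ir_right) in zip(sources, stereo_irs):
        for i, v in enumerate(convolve(s, ir_left)):   # this source, left ear
            out_left[i] += v
        for i, v in enumerate(convolve(s, ir_right)):  # this source, right ear
            out_right[i] += v
    return out_left, out_right


# Two hypothetical sources: one heard mostly on the left, one mostly on the right.
left, right = render_sources(
    [[1.0], [0.0, 1.0]],
    [([1.0, 0.5], [0.25, 0.0]), ([0.25], [1.0])],
)
```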

The real case: n senders, two receivers – mono in, not n stereo IRs, stereo out

In real life, you do not have n IRs. As stated, you would need an IR for each musician of an orchestra, and for a pipe organ you would have to record an IR for each of the pipes. And this is just for one listener position. Hence, it is not feasible to faithfully simulate the sound in these cases, at least not by the IR convolution method. Often you don't have n input signals anyway, but only a sum signal.

So what can be done? As explained above, you can convolve a mono audio signal with a stereo IR to get a stereo signal. But if you handle the sound of an orchestra or a pipe organ that way, it is as if the whole orchestra or organ were played through a single loudspeaker placed where the impulse for the IR was emitted. There would be no spatial information corresponding to the various positions of the musicians or the organ pipes.

Luckily, most input signals are available in stereo. So, as a compromise, it is common to convolve the left input channel with the left channel of the IR and the right input channel with the right channel of the IR. There is no strict physical justification for this, and it does not give a faithful reproduction of the acoustical situation. However, it is a way to retain the spatial information of the source signal while adding the characteristics of the room. The result is pleasing to the ear, as it sounds spatial rather than flat. This is sometimes called "Parallel Stereo"; see https://www.liquidsonics.com.

"Parallel Stereo" convolution reverb is a way to retain information about the sound sources' locations if you don't have separate sound signals or don't have multiple IRs. It is not a faithful acoustical simulation, but it gives enjoyable results.
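In code, "Parallel Stereo" is just two independent mono convolutions. The sketch below (function name and IR values hypothetical) also shows a consequence: a sound present only in the left input channel never reaches the right output channel.

```python
def convolve(signal, ir):
    """Direct convolution: out[n] = sum over k of signal[k] * ir[n - k]."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for k, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[k + j] += s * h
    return out


def parallel_stereo(in_left, in_right, ir):
    """ir is a pair (left channel, right channel).
    Left input with left IR channel, right input with right IR channel."""
    ir_left, ir_right = ir
    return convolve(in_left, ir_left), convolve(in_right, ir_right)


# A click that is present only in the left input channel...
out_left, out_right = parallel_stereo([1.0, 0.0], [0.0, 0.0], ([1.0, 0.5], [0.75, 0.25]))
# ...stays on the left: the right output is silent.
assert all(v == 0.0 for v in out_right)
```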

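Some systems also support an approach called "True Stereo"; see the LiquidSonics knowledge base mentioned above. The name does not make much sense, and there is no physical justification for this either. This method uses two stereo IRs: the left input channel is convolved with a stereo IR recorded with the impulse emitted at a left source position, the right input channel is convolved with a second stereo IR recorded with the impulse emitted at a right source position, and the two stereo results are added. Like "Parallel Stereo", it does not give a faithful reproduction of the acoustical situation.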

"True Stereo" convolution reverb is another way to retain information about the sound sources' locations if you don't have separate sound signals or don't have multiple IRs. It is not a faithful acoustical simulation, but it gives enjoyable results. A pair of stereo IRs is needed.
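A sketch of "True Stereo", assuming the common formulation with four convolutions: the left input channel is convolved with both channels of an IR recorded from a left source position, the right input channel with both channels of an IR recorded from a right source position, and the results are summed per output channel. Whether a particular product scales the sums (for example by 0.5) is not covered here; the sketch simply adds them. Function name and IR values are hypothetical.

```python
def convolve(signal, ir):
    """Direct convolution: out[n] = sum over k of signal[k] * ir[n - k]."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for k, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[k + j] += s * h
    return out


def true_stereo(in_left, in_right, ir_from_left, ir_from_right):
    """Each ir_from_* is a stereo IR (to_left, to_right), recorded with the
    impulse emitted at a left / right source position (assumed layout).
    Four convolutions; results are summed per output channel without scaling."""
    channels = [*ir_from_left, *ir_from_right]
    if len(in_left) != len(in_right) or len({len(h) for h in channels}) != 1:
        raise ValueError("input channels and IR channels must have matching lengths")
    ll = convolve(in_left, ir_from_left[0])    # left input  -> left output
    lr = convolve(in_left, ir_from_left[1])    # left input  -> right output
    rl = convolve(in_right, ir_from_right[0])  # right input -> left output
    rr = convolve(in_right, ir_from_right[1])  # right input -> right output
    out_left = [a + b for a, b in zip(ll, rl)]
    out_right = [a + b for a, b in zip(lr, rr)]
    return out_left, out_right


# Unlike "Parallel Stereo", a left-only input also reaches the right output.
out_left, out_right = true_stereo(
    [1.0], [0.0],
    ([1.0, 0.5], [0.25, 0.125]),  # hypothetical IR, source on the left
    ([0.25, 0.125], [1.0, 0.5]),  # hypothetical IR, source on the right
)
```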

The convolution modes of Thafknar

The audio input and output of Thafknar, as well as the IRs, are always two-channel. For mono signals, both channels contain identical data. Two mode parameters control how the channels are handled and allow adaptation to various situations:


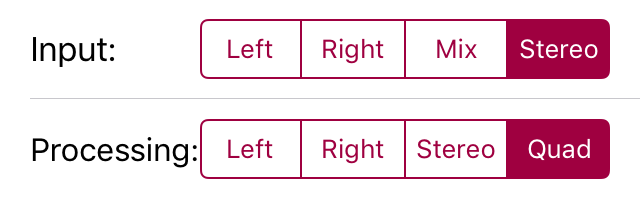

The Input mode controls what's done with the input:

- Left: Take only the left channel.

- Right: Take only the right channel.

- Mix: Take the average of the left and the right channels.

- Stereo: Take both channels.
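The four input modes can be sketched as a small selection function. The mode strings and the function name `select_input` are hypothetical; the sketch only mirrors the list above, with Mix taking the sample-wise average of both channels.

```python
def select_input(mode, left, right):
    """Return the list of channels that enter the convolution stage."""
    if mode == "Left":
        return [left]
    if mode == "Right":
        return [right]
    if mode == "Mix":
        # Average of both channels: a single mono channel.
        return [[(l + r) / 2 for l, r in zip(left, right)]]
    if mode == "Stereo":
        return [left, right]
    raise ValueError(f"unknown input mode: {mode!r}")


mono = select_input("Mix", [1.0, 0.0], [0.0, 1.0])  # [[0.5, 0.5]]
```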


The Processing mode controls how the input is combined with the IR:

- Left: Convolve the input with the left channel of the IR.

- Right: Convolve the input with the right channel of the IR.

- Stereo: Convolve the input with both channels of the IR.

- Quad: Convolve the input with both channels of two IRs.

For processing mode Quad, the convolutions are done as explained for "True Stereo" at https://www.liquidsonics.com, and the IR files must be named "<name> L.<extension>" and "<name> R.<extension>". Either of the two IR files can be selected; Thafknar will automatically load the other one as well. If there is no corresponding second file, Thafknar shows a warning and uses Stereo mode.
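The file name pairing can be sketched with Python's `pathlib`. Both functions are hypothetical: `companion_ir` derives the partner name from the "<name> L.<extension>" / "<name> R.<extension>" convention, and `processing_mode_for` mimics the fallback to Stereo when the partner is missing, given a set of available file names.

```python
from pathlib import Path
from typing import Optional


def companion_ir(selected: str) -> Optional[str]:
    """Map '<name> L.<ext>' to '<name> R.<ext>' and back.
    Returns None if the name does not follow the convention."""
    path = Path(selected)
    stem, suffix = path.stem, path.suffix
    if stem.endswith(" L"):
        other = stem[:-2] + " R"
    elif stem.endswith(" R"):
        other = stem[:-2] + " L"
    else:
        return None
    return str(path.with_name(other + suffix))


def processing_mode_for(selected: str, available: set) -> str:
    """Use Quad only if the partner file exists, else fall back to Stereo."""
    other = companion_ir(selected)
    return "Quad" if other is not None and other in available else "Stereo"
```

For example, `companion_ir("Cathedral L.wav")` gives `"Cathedral R.wav"`, while a name without the " L" / " R" suffix gives `None`.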

Some examples may help to illustrate the usage of these parameters, referring to the cases discussed above:

- For the base case where audio in and IR are mono, take Input = Left, Processing = Left.

- For the easy case where audio in is mono and the IR is stereo, take Input = Left, Processing = Stereo.

- For "Parallel Stereo" where audio in and IR are stereo, take Input = Stereo, Processing = Stereo.

- For "True Stereo" where audio in and (two) IRs are stereo, take Input = Stereo, Processing = Quad.

The first example has mono output; the other examples have stereo output. Note that the actual number of convolution operations depends on the parameters, as given in this table:

| Input \ Processing | Left | Right | Stereo | Quad |
|---|---|---|---|---|
| Left | 1 | 1 | 2 | 4 |
| Right | 1 | 1 | 2 | 4 |
| Mix | 1 | 1 | 2 | 4 |
| Stereo | 2 | 2 | 2 | 4 |

The combinations of a mono input mode (Left, Right or Mix) with Quad processing do not make sense, as the input is mono and so contains no spatial information to preserve. Furthermore, the same result could be achieved with half the effort by doing Stereo processing with the sum of the two IRs.
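The "half the effort" remark follows from linearity. With identical input channels x, the left Quad output is x convolved with the left channel of the "L" IR plus x convolved with the left channel of the "R" IR, which equals x convolved with the sum of those two IR channels (and likewise for the right output). A small numerical check, with made-up IR values:

```python
def convolve(signal, ir):
    """Direct convolution: out[n] = sum over k of signal[k] * ir[n - k]."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for k, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[k + j] += s * h
    return out


def add(a, b):
    """Sample-wise sum of two equally long signals."""
    return [x + y for x, y in zip(a, b)]


x = [1.0, -0.5, 0.25]                    # mono input (both channels identical)
ir_l_file = ([1.0, 0.5], [0.25, 0.125])  # hypothetical "<name> L" IR: (left, right)
ir_r_file = ([0.5, 0.25], [1.0, 0.0])    # hypothetical "<name> R" IR: (left, right)

# Quad with a mono input: four convolutions.
quad_left = add(convolve(x, ir_l_file[0]), convolve(x, ir_r_file[0]))
quad_right = add(convolve(x, ir_l_file[1]), convolve(x, ir_r_file[1]))

# Stereo with the channel-wise sum of the two IRs: two convolutions.
stereo_left = convolve(x, add(ir_l_file[0], ir_r_file[0]))
stereo_right = convolve(x, add(ir_l_file[1], ir_r_file[1]))

assert all(abs(p - q) < 1e-12 for p, q in zip(quad_left, stereo_left))
assert all(abs(p - q) < 1e-12 for p, q in zip(quad_right, stereo_right))
```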

The Quad setting with its four convolution operations can be quite demanding for your device if you use long IRs. You can try the Parallelise option to speed things up a bit. This option can be found in the Settings view of the Thafknar app or the Thafknar audio unit extension, respectively. Parallelising does not help in case of only one or two convolution operations.